In this article, Paul McCafferty, SVP of Research & Development, Edge Intelligence, talks about the proliferation of real-time machine-based and sensor-derived data, and the need to reimagine how data should be gathered, stored, accessed and analysed on a global scale.

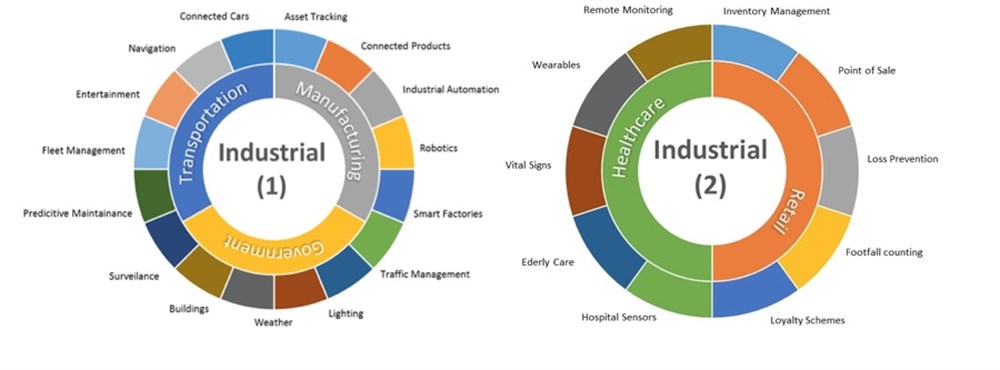

The adoption of the Internet of Things has spawned a proliferation of data the likes of which the world has never experienced. Everything from consumer wearables to self-driving cars is generating increasingly complex data at a rapid pace. The implications of IoT data for business, including manufacturing, transportation and retail as well as hundreds of sub-segments, bring with them inherent risks and challenges that cannot be solved by the status quo IT architecture. Closing out 2017, two-thirds of the CEOs of Global 2000 companies placed digital transformation at the center of their corporate strategy. The resulting reams of data (think petabytes) have created the need to reimagine how data should be gathered, stored, accessed and analysed on a global scale.

IoT Data Considerations for Engineering and Industrial Manufacturing

Commercialising IoT technology has created opportunities for mainstream manufacturers and businesses of all stripes to take advantage of the data output from their products and processes. Many IoT advancements in engineering are aimed at supply chain improvements (e.g. cost reduction and efficiency), while the IoT opportunities in industrial manufacturing center on developing product enhancements and service improvements based on the automatic and ongoing real-time generation of machine-based and sensor-derived data.

For example, a single aircraft with a modern engine generates approximately 2-5 TB of data each day, depending on the number of flights it makes. Similarly, autonomous cars, which may have more than 100 individual sensors, generate terabytes per hour driven. Modern manufacturing facilities generate data at every point in the production process, which informs production planning and an intelligence-enhanced workflow. Offshore oil rigs produce oil—and data—by the barrel, with upwards of 30,000 separate data sensors generating a continuous stream of data.

In all cases, the data that is produced can have a material impact on production processes, maintenance schedules and product improvements by providing engineers with near-real-time data on routes, speeds, engine performance, component wear and tear and even contextual factors such as weather conditions. Airline pilot behaviour data can also be analysed to monitor performance, efficiency and safety. The edge makes this possible. Distributing data to a relatively low-cost “edge” device (e.g. commodity compute and storage resources) located within an airport terminal gives aircraft manufacturers the ability to analyse and compare data more quickly than ever before. The immediacy of edge intelligence enables automated feedback loops in the manufacturing process as well as predictive maintenance plans that can maximise the up-time and lifespan of equipment and assembly lines.

A New Category of Computing: The Edge of the Network

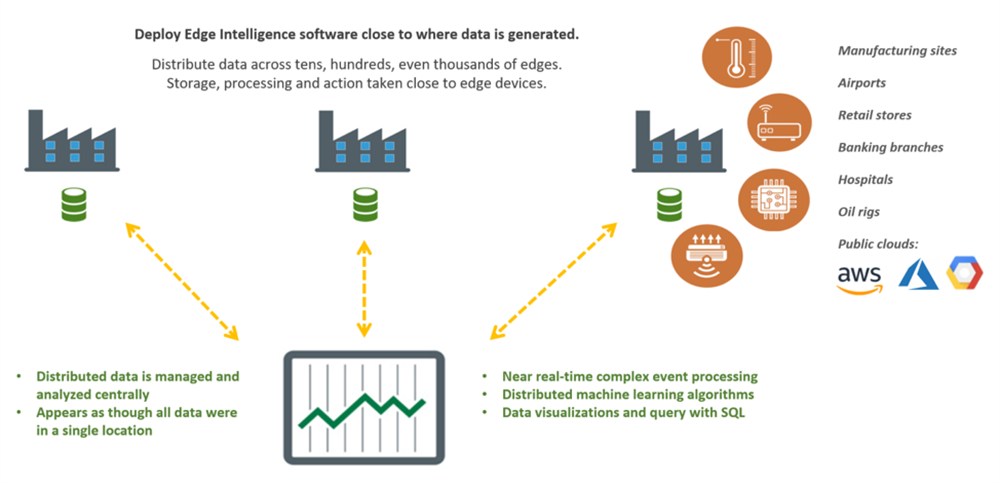

An edge device is one that enables a local user to connect and transfer data to a network by serving as an entry point into the network. The edges are components such as sensors and devices that produce data automatically. Most IoT “things” are edges as well—and there are a lot of them! Gartner has predicted that by 2020, there will be over 20 billion things connected to the Internet.

Remote data-producing environments exist in every industry, and the need to gather, manage and analyse this data is critical to creating and maintaining efficiency and business advantage. The edges of the network have become the place where most data are produced. In industrial environments the amount of data produced at the edge is staggering, but because of its volume and complexity, much of it is not used. In fact, 99.9% of data produced at connected factories (1 Petabyte per day) are not analyzed at all, according to a recent report by Cisco.

Current Big Data Architectures Cannot Support IoT

Centralised data models no longer support IoT for a number of reasons. Moving data once it has been gathered in a central location presents technical, security, geo-political, timing and cost challenges. Network bandwidth limitations and latency issues create bottlenecks between data gathering and data use. The need to enforce security policies with regard to the types of data, place of origin and ultimate use also creates time lags, due to the number of governing authorities and regulations that must be satisfied and documented prior to accessing specific data, if it can be moved at all. Insufficient bandwidth also contributes to the problem: moving 1TB of data from a local site to a central cloud or data center using a high-speed internet connection (50MB/sec) would take more than five hours. A lack of centralised capacity to load and process all the data produced at numerous edges can also hinder businesses’ ability to create value from their data. Data transportation costs can be prohibitive as well: the cost of bulk shipping data can be astronomical, given the amount of data and the constancy with which it is created. In fact, new data is generated faster than it can be shipped.
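The five-hour figure above can be checked with simple arithmetic. The sketch below is an illustration only: it assumes "50MB/sec" means 50 megabytes per second in decimal units with no protocol overhead, and the function name `transfer_hours` is hypothetical.

```python
# Back-of-the-envelope transfer time for bulk data movement.
# Assumption: decimal units (1 TB = 10**12 bytes, 1 MB = 10**6 bytes)
# and a sustained rate with no protocol overhead or retries.

TB = 10**12
MB = 10**6


def transfer_hours(size_bytes: float, rate_bytes_per_sec: float) -> float:
    """Return the hours needed to move size_bytes at a sustained rate."""
    seconds = size_bytes / rate_bytes_per_sec
    return seconds / 3600


if __name__ == "__main__":
    hours = transfer_hours(1 * TB, 50 * MB)
    print(f"1 TB at 50 MB/s: {hours:.2f} hours")  # 1 TB at 50 MB/s: 5.56 hours
```

If the link speed were instead 50 megabits per second, the same transfer would take eight times as long, which only strengthens the argument for processing data where it is produced.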

The traditional data warehouse (on-premise or cloud-based) doesn’t work in this new data-driven world order: inefficient, incomplete and costly access to data diminishes its value. Solutions like the public cloud and private data warehouses do not solve these problems, as data remains centralised in one geography, and therefore constrained for all the reasons noted above. For these reasons, and many more, IDC Research predicts that by 2019, at least 40% of IoT-created data will be stored, processed, analysed and acted upon close to or at the edge of the network.

Data Analysis is the Key to Business Advantage

Moving beyond data aggregation and storage to the true value of the data itself relies upon powerful and timely data analysis. With 94% of enterprise decision makers saying that analytics are important or critical to their digital transformation efforts, Forrester Research asserts that analysing data from IoT devices is critical to extract business value and warns that many efforts will fail due to data silos and architecture constraints.

Simply put, the business value of the IoT is created by analyzing the data, drawing insights, and improving actions and outcomes. Current data warehouse configurations and fixed-field queries fall short because centralised analytics can’t deliver insights and support actions in a timely and relevant way. Just as the volume of data has exploded with the IoT, so too has the number of data types and the complexity of the analysis needed to gain important insights that become critical to creating business advantage. True edge computing supports every form of analytics, including pre‐defined analytics, ad‐hoc analytics, self‐service analytics and data science. The ability to analyse high volumes of IoT data in a timely way and create and analyse varying types of data is at the core of digital transformation.

Complex Event Processing and Machine Learning: The Edge is the Advantage

The proliferation of sensor networks and smart devices has created a continuous data stream that often must be assimilated with legacy data and analysed in near real-time so that businesses can make decisions based on up-to-date intelligence delivered from the edge. The ease with which an edge architecture can conduct complex event processing in near real-time at the edge equates to business advantage. The benefit of doing machine learning at the edge is time—working with the latest set of data—as well as logistics (not having to move a dataset to a system that runs data against machine learning algorithms). The edge adds efficiency, eliminating the need to transfer data to a cloud-based warehouse and then transfer it again to analyse it for machine learning.

Complex event processing also has additional features that result in cleaner data, improved efficiency and more actionable results. CEP at the edge manages continuous feeds of multiple data types and provides pre-aggregation; matches incoming data against blacklists; detects patterns and identifies anomalous conditions; and delivers temporal results.
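To make the CEP capabilities listed above more concrete, here is a minimal sketch of an edge-side processor that filters against a blacklist, flags anomalous readings within a sliding window, and pre-aggregates the window into a timestamped summary. It is not drawn from any particular product: the names `Reading` and `EdgeEventProcessor`, the z-score rule and the default thresholds are all assumptions chosen for illustration.

```python
from collections import deque
from dataclasses import dataclass
from statistics import mean, pstdev


@dataclass
class Reading:
    sensor_id: str
    timestamp: float  # seconds since some epoch (assumed)
    value: float


class EdgeEventProcessor:
    """Hypothetical sliding-window processor for a single data feed."""

    def __init__(self, blacklist, window_size=5, z_threshold=3.0):
        self.blacklist = set(blacklist)
        self.window = deque(maxlen=window_size)
        self.z_threshold = z_threshold

    def process(self, reading):
        """Return the list of event labels raised by this reading."""
        # Blacklisted sources are dropped before they reach the window.
        if reading.sensor_id in self.blacklist:
            return ["blacklisted"]
        events = []
        # Only test for anomalies once the window is full.
        if len(self.window) == self.window.maxlen:
            values = [r.value for r in self.window]
            mu, sigma = mean(values), pstdev(values)
            if sigma > 0 and abs(reading.value - mu) / sigma > self.z_threshold:
                events.append("anomaly")
        self.window.append(reading)
        return events

    def aggregate(self):
        """Pre-aggregate the current window into a compact summary."""
        if not self.window:
            return None
        values = [r.value for r in self.window]
        return {
            "count": len(values),
            "min": min(values),
            "max": max(values),
            "mean": mean(values),
            "start": self.window[0].timestamp,
            "end": self.window[-1].timestamp,
        }


if __name__ == "__main__":
    proc = EdgeEventProcessor(blacklist={"bad-1"})
    for i, v in enumerate([10.0, 10.1, 9.9, 10.0, 10.2, 50.0]):
        print(proc.process(Reading("s1", float(i), v)))
    print(proc.aggregate())
```

Only the summary and the raised events would need to leave the site in this design, which is the efficiency argument for pre-aggregation at the edge. A production system would typically use a streaming engine rather than hand-rolled windows.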

When a company has the flexibility to execute complex event processing and machine learning algorithms in near real-time, combined with querying large historical volumes of data for forensics, aggregate reporting and other types of visualizations, intelligence and insights, it has realised the true value of edge computing.

Visit www.edgeintelligence.com to learn more and download The Next Wave of Analytics – At the Edge: A Distributed Analytical Platform for All Analytics, authored by Rick F. van der Lans, Business Intelligence Analyst, R20/Consultancy.